Towards automated and smart interactions with Android apps

In previous posts we discussed the types of tests that can be carried out on Android apps, and where and how they can be developed. We also introduced Android Studio and the benefits it gains from the native tools integrated in the SDK (Software Development Kit).

One of those native tools is Exerciser Monkey, an automated tool that interacts with Android apps by generating pseudorandom events.
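For reference, Monkey is launched from a controller through `adb shell monkey`. The Python sketch below only assembles and runs that command line. The helper names `monkey_command` and `run_monkey` and the package name `com.example.app` are assumptions made for illustration; `-p`, `-s`, `--throttle` and `-v` are documented Monkey options.

```python
import subprocess


def monkey_command(package, events, seed=None, throttle_ms=None):
    """Build the adb command that runs Monkey against a single package."""
    cmd = ["adb", "shell", "monkey", "-p", package]
    if seed is not None:
        # Re-running with the same seed reproduces the same event sequence.
        cmd += ["-s", str(seed)]
    if throttle_ms is not None:
        # Fixed delay, in milliseconds, inserted between events.
        cmd += ["--throttle", str(throttle_ms)]
    # -v raises verbosity; the final argument is the number of events.
    cmd += ["-v", str(events)]
    return cmd


def run_monkey(package, events, **options):
    """Run Monkey on the connected device (needs adb on the PATH)."""
    return subprocess.run(monkey_command(package, events, **options), check=True)
```

Calling `run_monkey("com.example.app", 500, seed=42)` would send 500 pseudorandom events to that (hypothetical) package.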

Nevertheless, it is not the only tool available for this purpose. Many others have been developed to perform smart interactions with apps, e.g. by using a model-based scheme of operation. By smart we mean a more exhaustive interaction than can be achieved by generating pseudorandom events. With it, we should be able to traverse every activity of an app by, e.g., clicking on all the UI elements.

The benefit of model-based tools is their improved code coverage, thanks to their extended capacity for filling in text fields or logging in to the app, which is impossible for a pseudorandom tool.

The state of the art reports several alternative model-based tools. Unfortunately, when we tried to use any of them, e.g. UIHarvester, we soon realized that the part of the source code in charge of the automated interaction with the displayed elements was not accessible. Instead, this tool, like the others we found, only generates a model of the app, which can serve as the basis for the subsequent development of an interaction algorithm.

We also had problems with the emulator, because the Android Emulator doesn’t allow installing frameworks such as the Xposed Framework, which is required by some of the model-based automated interaction tools we found. The problem lies in the architecture of the emulator itself: it has no recovery mode, which is essential for installing such frameworks.

Developing an alternative tool from scratch.

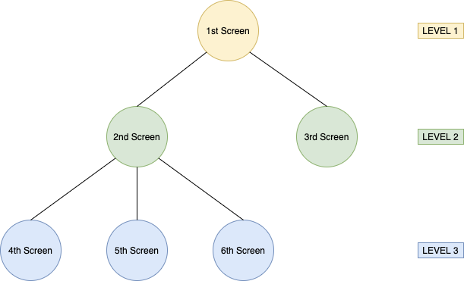

Because of all this, we decided to develop a tool capable of automatically interacting with any Android app: it finds the UI elements and clicks or swipes on them in order to reach every screen of the application. The traversal is based on the DFS (Depth-First Search) algorithm, which means that it follows each path down to the deepest node (app screen) it can reach before backtracking to visit the remaining nodes of the app.

Illustration 1: Tree diagram of an app.
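The depth-first order described above can be sketched in a few lines of Python. This is a minimal illustration on a static model, not the tool's actual code: the screen names and the `APP` dictionary are a made-up example, and `dfs_order` is a hypothetical helper.

```python
def dfs_order(screens, start):
    """Return the screens in the order a depth-first traversal reaches them.

    `screens` maps a screen name to the list of screens reachable from it.
    """
    visited = []
    seen = set()

    def visit(screen):
        if screen in seen:
            return  # already explored, do not descend again
        seen.add(screen)
        visited.append(screen)
        for child in screens.get(screen, []):
            visit(child)  # go as deep as possible before trying siblings

    visit(start)
    return visited


# Hypothetical app: Main leads to Settings and Profile; Settings leads to About.
APP = {
    "Main": ["Settings", "Profile"],
    "Settings": ["About"],
    "Profile": [],
    "About": [],
}
print(dfs_order(APP, "Main"))  # ['Main', 'Settings', 'About', 'Profile']
```

Note that About, the deepest screen, is reached before Profile, even though Profile is directly reachable from Main.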

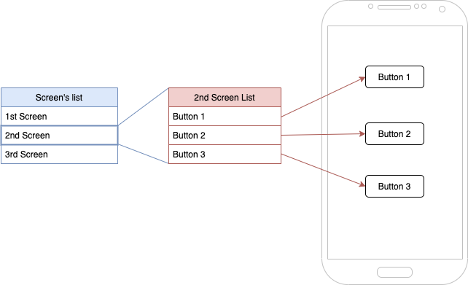

Creating an automated testing tool for third-party apps requires a black-box approach, so we used the UI Automator framework to find the displayed elements. Once identified, the elements are saved in lists and associated with a specific screen, which lets us remember whether a screen has been visited or not. Each element can be unequivocally identified by its hash, so by comparing the lists we can tell whether a node (screen) has already been visited.
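As a rough sketch of this step, the Python code below extracts the clickable elements from a UI hierarchy dump, such as the XML produced by `adb shell uiautomator dump`, and computes a hash for each one. The post does not say how the tool queries UI Automator or which attributes go into the hash, so the attribute selection, the use of SHA-1, the helper names and the `SAMPLE_DUMP` content are all assumptions.

```python
import hashlib
import xml.etree.ElementTree as ET

# Hypothetical, shortened hierarchy dump for an imaginary app.
SAMPLE_DUMP = """<hierarchy rotation="0">
  <node index="0" text="" resource-id="" class="android.widget.FrameLayout"
        package="com.example.app" clickable="false" bounds="[0,0][1080,1920]">
    <node index="0" text="Log in" resource-id="com.example.app:id/login"
          class="android.widget.Button" package="com.example.app"
          clickable="true" bounds="[100,200][980,320]" />
    <node index="1" text="Help" resource-id="com.example.app:id/help"
          class="android.widget.TextView" package="com.example.app"
          clickable="true" bounds="[100,400][980,520]" />
  </node>
</hierarchy>"""


def element_hash(node):
    """Hash the attributes assumed to describe a UI element."""
    key = "|".join(
        node.get(attr, "") for attr in ("class", "resource-id", "text", "bounds")
    )
    return hashlib.sha1(key.encode("utf-8")).hexdigest()


def clickable_elements(dump_xml):
    """Return the hashes of all clickable nodes in a hierarchy dump."""
    root = ET.fromstring(dump_xml)
    return [
        element_hash(node)
        for node in root.iter("node")
        if node.get("clickable") == "true"
    ]
```

Here `clickable_elements(SAMPLE_DUMP)` yields two hashes, one for the button and one for the text view; the non-clickable container is skipped.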

So, the structure (illustration 2) consists of a list that represents the whole diagram of the app and contains other lists. Each of those inner lists identifies a specific screen and contains the UI elements we will interact with.

Illustration 2: Structure of lists to memorize app’s screens and their UI elements.
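A list-of-lists structure like the one in illustration 2 could look as follows in Python. The class name `ScreenMemory` and its methods are hypothetical, and comparing screens regardless of element order is an assumption of this sketch, not something the original tool is known to do.

```python
class ScreenMemory:
    """Outer list of screens; each screen is a list of element hashes."""

    def __init__(self):
        self.screens = []

    def find(self, element_hashes):
        """Return the index of the stored screen with the same elements, or -1."""
        target = sorted(element_hashes)
        for index, stored in enumerate(self.screens):
            if sorted(stored) == target:
                return index
        return -1

    def remember(self, element_hashes):
        """Store the screen if it is new; return (index, is_new)."""
        index = self.find(element_hashes)
        if index != -1:
            return index, False
        self.screens.append(list(element_hashes))
        return len(self.screens) - 1, True
```

For example, after `memory.remember(["a", "b"])` returns `(0, True)`, a second call with `["b", "a"]` returns `(0, False)`, so the explorer knows it has landed on a screen it has already visited.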

Implementing the DFS algorithm requires a variable that tracks the depth of the current screen in the diagram. We didn’t turn to a library for this, because adapting one to our needs would have taken longer than developing it from scratch.
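To show how such a depth variable can drive the exploration, here is a small sketch that clicks elements and presses back on a simulated device. `FakeDevice`, `explore` and the `MODEL` dictionary are invented for this example; a real run would replace the fake device with calls to the UI automation framework.

```python
class FakeDevice:
    """Stand-in for a device: each element simply names the screen it opens."""

    def __init__(self, model, start):
        self.model = model
        self.stack = [start]  # back stack, similar to Android's

    def current(self):
        return self.stack[-1]

    def elements(self):
        return list(self.model[self.current()])

    def click(self, element):
        self.stack.append(element)

    def back(self):
        self.stack.pop()


def explore(device):
    """Depth-first exploration; returns (screen, depth) pairs in visiting order."""
    visited = []
    seen = set()
    depth = 0  # depth of the current screen in the app diagram

    def visit():
        nonlocal depth
        screen = device.current()
        seen.add(screen)
        visited.append((screen, depth))
        for element in device.elements():
            device.click(element)
            depth += 1
            if device.current() not in seen:
                visit()  # new screen: keep going deeper
            device.back()  # return to the parent screen
            depth -= 1

    visit()
    return visited


# Hypothetical app model; Settings also links back to Main.
MODEL = {
    "Main": ["Settings", "Profile"],
    "Settings": ["About", "Main"],
    "Profile": [],
    "About": [],
}
```

With this model, `explore(FakeDevice(MODEL, "Main"))` visits Main at depth 0, Settings at depth 1, About at depth 2 and finally Profile at depth 1, and the link from Settings back to Main is not explored again.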

Testing our development.

It was time to validate our implementation and check its behavior against the DFS algorithm on which it is based. First, we tested it in a controlled environment formed by an Android Emulator device running API 22 and a controller (macOS). The emulator allowed us to watch how the interaction was performing, and once we verified that it was working as expected we could proceed to test it in a real environment.

Comparing results with Exerciser Monkey.

The real environment is formed by an Ubuntu virtual machine as controller and a physical device, a “Redmi 8” running Android 9. The controller includes a traffic analysis module to intercept the communications of external apps. For our analysis, we downloaded 156 random apps and ran our new tool on them, and then repeated the process on the same apps using Exerciser Monkey. Even though Exerciser Monkey triggered more communications on most of the apps, our new tool triggered a larger number of communications on 42 of the 156 apps.

Nevertheless, the total number of communications is not the only important metric. As we are interacting with apps to identify their privacy leaks, the number and type of data leaked through these communications is even more important. Using this as a metric, we observed similar results from both tools, but with a clear time saving in favor of our new tool, which is an important factor to consider.

In addition, we plan to introduce some new features in future work. Specifically, an automated login for applications that require it, which would dramatically improve the new tool’s effectiveness over Exerciser Monkey, which cannot do this.

Towards automated and smart interactions with Android apps by davidrtorrado is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
