From Docker to Kubernetes: Starting with orchestration

2020 has been a year full of migrations because of COVID-19 and the resulting mobility restrictions. Many organizations have been forced to migrate to the cloud, and our project was no exception. To adapt to the changes, our team had to migrate a local distributed platform to a cloud environment. That migration triggered a more significant one: the move from Docker to Kubernetes. In this post I would like to talk about that process.

At the end of March, when we realized that we couldn't go back to our laboratory for a while, we decided to move our project to the cloud so we could work remotely and in a coordinated way. After considering different options, we decided to work with Google Cloud Platform (GCP).

GCP has dozens of useful tools with simple user interfaces and extensive documentation. In our local deployment we worked with a set of microservices, each one running in an independent Docker container. When we started with GCP we found Google Kubernetes Engine (GKE), the GCP tool that offers a secure, managed Kubernetes service, so we decided it was the perfect time to start working on orchestration.

When working with plain Docker, you must spend time and effort on manual tasks such as starting and stopping containers, restarting them after failures, managing CPU and memory, and so on. As the number of containers grows, these tasks become much more complicated and need automation. An orchestrator is a system that automates the management of containers and how they interact with each other.

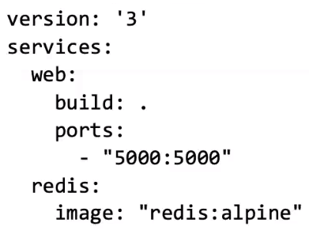

The intermediate step between Docker and an orchestrator like Kubernetes is Docker Compose, a tool that simplifies the use of Docker through a set of configuration/deployment files that tell the Docker Engine what it must do. A docker-compose file is written in YAML and typically has the structure described below.
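The original listing is not reproduced here, so what follows is only a minimal sketch of such a file. The service names (`api`, `db`), the build path, the image tag, the port mapping and the environment value are hypothetical placeholders, not the ones from our project.

```yaml
version: "3.8"              # Compose file format version
services:
  api:                      # service name (hypothetical)
    build: ./api            # build the image from the Dockerfile in ./api
    ports:
      - "8080:8080"         # host:container port mapping (assumed)
    depends_on:
      - db                  # start the db service before this one
  db:                       # second service, based on an existing image
    image: postgres:13
    environment:
      POSTGRES_PASSWORD: example   # placeholder value, not a real credential
```

With a file like this saved as `docker-compose.yml`, running `docker-compose up` in the same directory should start both containers. Recent Compose releases treat the top-level `version` key as optional.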

The first line refers to the version of the Compose file format that we are using. Next comes the `services` section. Each element in this section describes a container, and the key that opens the element is its name. We can build a container from a Dockerfile or use an existing image. Once this kind of file is understood, it is not difficult to start with Kubernetes, which is also configured through YAML files.

The main component in Kubernetes is the cluster. A Kubernetes cluster consists of a set of worker machines, called nodes, that run containerized applications. There is at least one worker node in every cluster. The nodes host the pods, which are the components of the application workload. Put simply, a pod is a group of one or more containers that share storage and network resources.
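To make the idea of a pod more concrete, here is a hedged sketch of a Pod manifest with two containers that share one volume. The pod name, the container names, the `busybox` image and the commands are assumptions chosen only for illustration.

```yaml
# Illustrative Pod: two containers sharing one volume (all names are assumptions)
apiVersion: v1
kind: Pod
metadata:
  name: shared-storage-demo
spec:
  volumes:
    - name: shared-data
      emptyDir: {}            # scratch volume that lives as long as the pod
  containers:
    - name: writer
      image: busybox:1.36
      # writes a file into the shared volume, then stays alive
      command: ["sh", "-c", "echo hello > /data/msg && sleep 3600"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
    - name: reader
      image: busybox:1.36
      # waits briefly, then reads the file written by the other container
      command: ["sh", "-c", "sleep 5; cat /data/msg; sleep 3600"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
```

Both containers mount the same `emptyDir` volume at `/data`, so a file written by one is visible to the other.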

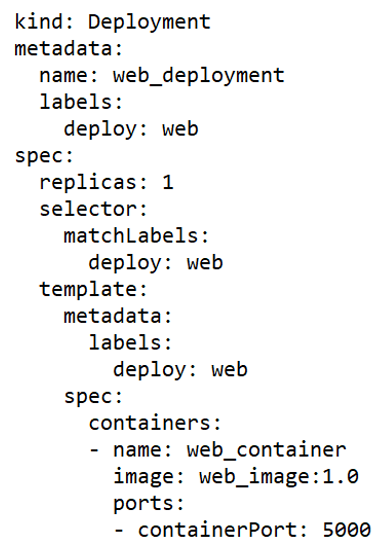

The next component is the ReplicaSet, whose function is to create and maintain a group of identical pods. Above it there is a higher-level concept, the Deployment, which manages the ReplicaSet. The Kubernetes developers recommend working with Deployments rather than with ReplicaSets directly. The typical workflow in Kubernetes consists of defining a Deployment in a YAML file, here called deployment.yaml, in which we specify the characteristics and the number of pods that we want to run.
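The original listing is not reproduced here, so the following is only a minimal sketch of what such a deployment.yaml might look like. The Deployment name, the `app: api` label, the `nginx` image and the port are hypothetical.

```yaml
# deployment.yaml (illustrative sketch; names, labels and image are assumptions)
apiVersion: apps/v1
kind: Deployment            # the kind of object to create
metadata:
  name: api-deployment      # name of the Deployment
spec:
  replicas: 1               # number of identical pods to keep running
  selector:
    matchLabels:
      app: api              # must match the labels in the pod template
  template:                 # template used to create each pod
    metadata:
      labels:
        app: api
    spec:
      containers:           # typically one container per pod
        - name: api
          image: nginx:1.25
          ports:
            - containerPort: 80
```

Raising `replicas` is enough to ask Kubernetes for more identical pods. The ReplicaSet behind the Deployment takes care of creating them.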

When we apply this file with the following command:

    kubectl create -f deployment.yaml

Kubernetes automatically creates a Deployment, a ReplicaSet and the requested number of pods.

In the `kind` field we set the type of element that we want to create, and we give it a name in the `metadata` section. In the `spec` section we specify the template of our pod and the number of replicas that we want to run. In the last section, `containers`, we can add all the containers that we want to deploy in each pod, typically one container per pod.

From this point there are multiple ways to extend your deployment. You can create a volume with the PersistentVolume component, create a Service, expose ports, set environment variables with a ConfigMap (or with a Secret in the case of passwords), obtain public IP addresses, and so on. In essence, we can create an environment that satisfies all our needs.
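As a hedged illustration of two of these options, the sketch below defines a ConfigMap and a Service in a single file. It assumes that the pods carry a hypothetical label `app: api` and listen on port 80. The object names and the `LOG_LEVEL` key are also invented for the example.

```yaml
# Illustrative ConfigMap and Service (all names and values are assumptions)
apiVersion: v1
kind: ConfigMap
metadata:
  name: api-config
data:
  LOG_LEVEL: "info"         # plain, non-sensitive configuration value
---
apiVersion: v1
kind: Service
metadata:
  name: api-service
spec:
  type: LoadBalancer        # asks the cloud provider for an external IP
  selector:
    app: api                # routes traffic to pods carrying this label
  ports:
    - port: 80              # port exposed by the Service
      targetPort: 80        # port the container listens on
```

A container can load the ConfigMap values as environment variables through `envFrom` with a `configMapRef`. Sensitive values such as passwords belong in a Secret instead.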

To sum up, starting with Kubernetes for a cloud deployment may seem too complicated, but it has huge advantages. My advice is to start with Kubernetes while working with a moderate number of containers, no more than a dozen, learn how to use it, get comfortable with it, and then take advantage of all its features when the number of containers grows.

From Docker to Kubernetes: Starting with orchestration by anadiezm is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.