Landing on a UGV

For the last few years, our department has been investigating how to deploy and use Unmanned Ground Vehicles (UGVs) in disaster scenarios, where a human being searching for survivors might be at risk [1]. The orography is often complex (take, for example, a city after an earthquake), there may be obstacles on the terrain, or an aerial point of view may be required. In those cases an Unmanned Aerial Vehicle (UAV) is probably needed, although its limited range, endurance and payload capacity are important drawbacks.

This is why teams of heterogeneous robots (a UGV and a UAV) may be a suitable solution [2], taking advantage of the strengths of both. To extend the endurance of the UAV, the UGV can carry it and supply it with energy, while the UAV takes off to overcome terrain obstacles and to provide a view from above.

One of our undergraduate coworkers, Pablo Rodriguez Palafox, has been developing an autonomous system that takes off from the UGV, tracks and follows it, and finally lands on it. His work builds on previous research also carried out in our department [3], and he presented it as his Bachelor's Thesis. It is a first step towards a more complex and autonomous system that our group will use not only in disaster scenarios but also in other tasks we are working on, such as monitoring environmental variables in greenhouses [4].

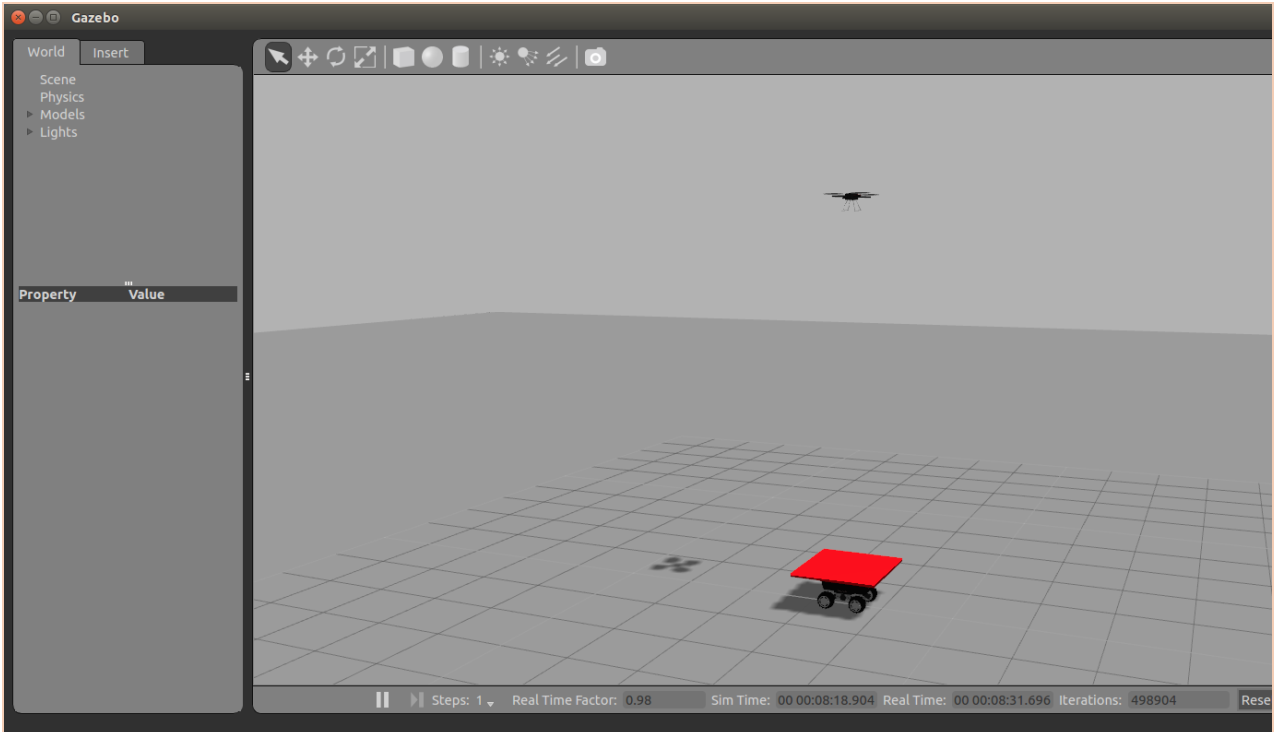

He used an AR.Drone 2.0 as the UAV and a Robotnik Summit XL as the UGV, on which he placed a red landing platform. Using the OpenCV library, he was able to detect the red platform and locate its centroid. To control the following and landing process, he first used an adaptive PID controller and later improved it with a Kalman filter that predicts the future position of the UGV. He also included a recovery mode: if the quadcopter fails to land or loses sight of the platform, it flies up and tries to locate the UGV again.
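The detect, predict and follow loop described above can be sketched in a few lines of Python. This is a minimal, dependency-free illustration under stated assumptions, not Pablo's implementation. `red_centroid` thresholds red pixels in HSV with the standard library's `colorsys` instead of OpenCV (with OpenCV one would typically combine `cv2.inRange` and `cv2.moments`). `ConstantVelocityKF` is a constant-velocity Kalman filter on a single image axis, and a fixed-gain `PID` stands in for the adaptive PID, whose adaptation rule is not described here. All names, thresholds, noise values (`q`, `r`), gains and the `lookahead` horizon are hypothetical.

```python
import colorsys

# Hypothetical sketch: thresholds, noise values and gains are assumptions,
# not the values used in the thesis.


def red_centroid(image, hue_tol=0.05, min_sat=0.5, min_val=0.3):
    """Centroid (x, y) of red pixels in an RGB image, or None if none are found.

    `image` is a list of rows; each row is a list of (r, g, b) tuples in 0..255.
    Red hue wraps around 0, so hues near 0 and near 1 are both accepted.
    """
    m00 = m10 = m01 = 0  # image moments: pixel count, sum of x, sum of y
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            if (h <= hue_tol or h >= 1 - hue_tol) and s >= min_sat and v >= min_val:
                m00 += 1
                m10 += x
                m01 += y
    if m00 == 0:
        return None
    return (m10 / m00, m01 / m00)


class ConstantVelocityKF:
    """1-D Kalman filter with state [position, velocity] and position-only measurements."""

    def __init__(self, q=0.01, r=1.0):
        self.x = [0.0, 0.0]                # state estimate
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.q = q                         # process noise added to each state variance
        self.r = r                         # measurement noise variance

    def predict(self, dt):
        # x <- F x and P <- F P F^T + q*I, with F = [[1, dt], [0, 1]]
        (p00, p01), (p10, p11) = self.P
        self.x = [self.x[0] + dt * self.x[1], self.x[1]]
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + self.q, p01 + dt * p11],
            [p10 + dt * p11, p11 + self.q],
        ]

    def update(self, z):
        # Measurement model H = [1, 0]: only the position is observed.
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r           # innovation variance
        k0, k1 = p00 / s, p10 / s  # Kalman gain
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]

    def predict_ahead(self, horizon):
        """Extrapolated position `horizon` time units ahead, without changing the state."""
        return self.x[0] + horizon * self.x[1]


class PID:
    """Fixed-gain PID controller (stand-in for the adaptive PID)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def follow_step(kf, pid, centroid_x, center_x, dt, lookahead):
    """One control tick along the image x axis; returns a command, or None if the platform is lost."""
    kf.predict(dt)
    if centroid_x is None:
        return None  # caller switches to a recovery behaviour (e.g. climb and search again)
    kf.update(centroid_x)
    target = kf.predict_ahead(lookahead)  # aim where the UGV is expected to be
    return pid.step(target - center_x, dt)
```

In use, `follow_step` would run once per camera frame for each image axis. Aiming the PID at the predicted position rather than the last measured centroid is what lets the UAV anticipate the UGV's motion, and a `None` return is the natural hook for the recovery mode.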

The algorithms were tested first in Gazebo and finally on the real platforms. He achieved remarkable results, with a very low failure rate.

As future work, we are considering using a more advanced UAV available in the department, such as the AscTec Pelican, equipped with a higher-resolution camera. We will then be able to use different visual markers on the landing platform to achieve more accurate relative positioning.

This video shows the results of the test carried out:

[1] – Murphy, R. R. (2014). Disaster Robotics. MIT Press.

[2] – Balakirsky, S., Carpin, S., Kleiner, A., Lewis, M., Visser, A., Wang, J., & Ziparo, V. A. (2007). Towards heterogeneous robot teams for disaster mitigation: Results and performance metrics from RoboCup Rescue. Journal of Field Robotics, 24(11-12), 943-967.

[3] – Garzón, M., Baira, I., & Barrientos, A. (2016). Detecting, Localizing and Following Dynamic Objects with a Mini-UAV. RoboCity16 Open Conference on Future Trends in Robotics, May 2016.

[4] – Roldán, J. J., Garcia-Aunon, P., Garzón, M., de León, J., del Cerro, J., & Barrientos, A. (2016). Heterogeneous Multi-Robot System for Mapping Environmental Variables of Greenhouses. Sensors, 16(7), 1018.

About Jaime Del Cerro

Indoor positioning system

We have almost finished the indoor positioning system based on an OptiTrack. The last mounts are ready to be installed.

Soon we will be able to fly several drones at once in a small room.
